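Overall, Keras is a very simple, easy-to-use framework that makes building a network quick and convenient. This post records the steps I took to build a CNN in Keras that recognizes captchas.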

Data generation

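First, use the ImageCaptcha library to generate captchas. There are two ways to do this. One is to generate a large number of images up front, store them on disk, and read in a portion at a time for training. The other is to produce the data with a generator and train with the Keras model's fit_generator method.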

```python
from captcha.image import ImageCaptcha
```
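A minimal sketch of the generator approach, assuming a charset of digits plus uppercase letters, 4-character captchas, 170×80 images, and labels one-hot encoded per character position with shape (batch, 4, 36). The names `CHARSET`, `encode_label` and `captcha_generator` are hypothetical. The `render` argument defaults to `ImageCaptcha(...).generate_image` but accepts any function that returns an image, which makes the batching logic easy to check without the captcha package.

```python
import random
import string

import numpy as np

# Assumed setup: digits + uppercase letters, 4 characters per captcha.
CHARSET = string.digits + string.ascii_uppercase
N_LEN = 4
N_CLASS = len(CHARSET)  # 36


def encode_label(text):
    """One-hot encode a captcha string into an array of shape (N_LEN, N_CLASS)."""
    y = np.zeros((N_LEN, N_CLASS), dtype=np.float32)
    for i, ch in enumerate(text):
        y[i, CHARSET.index(ch)] = 1.0
    return y


def captcha_generator(batch_size=32, width=170, height=80, render=None):
    """Yield (images, labels) batches forever, drawing new captchas each time."""
    if render is None:
        # Imported lazily so encode_label works without the captcha package.
        from captcha.image import ImageCaptcha
        render = ImageCaptcha(width=width, height=height).generate_image
    while True:
        X = np.zeros((batch_size, height, width, 3), dtype=np.float32)
        y = np.zeros((batch_size, N_LEN, N_CLASS), dtype=np.float32)
        for i in range(batch_size):
            text = "".join(random.choice(CHARSET) for _ in range(N_LEN))
            # generate_image returns an RGB PIL image; asarray gives (height, width, 3).
            X[i] = np.asarray(render(text), dtype=np.float32) / 255.0
            y[i] = encode_label(text)
        yield X, y
```

To train, pass the generator to `fit_generator` as described above, e.g. `model.fit_generator(captcha_generator(), steps_per_epoch=500, epochs=5)` (the numbers are only illustrative). In TF 2.x `fit_generator` is deprecated and `model.fit` accepts the generator directly with the same `steps_per_epoch` argument. Because every batch is freshly generated, the model never sees the same captcha twice, which limits overfitting.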

Building the network

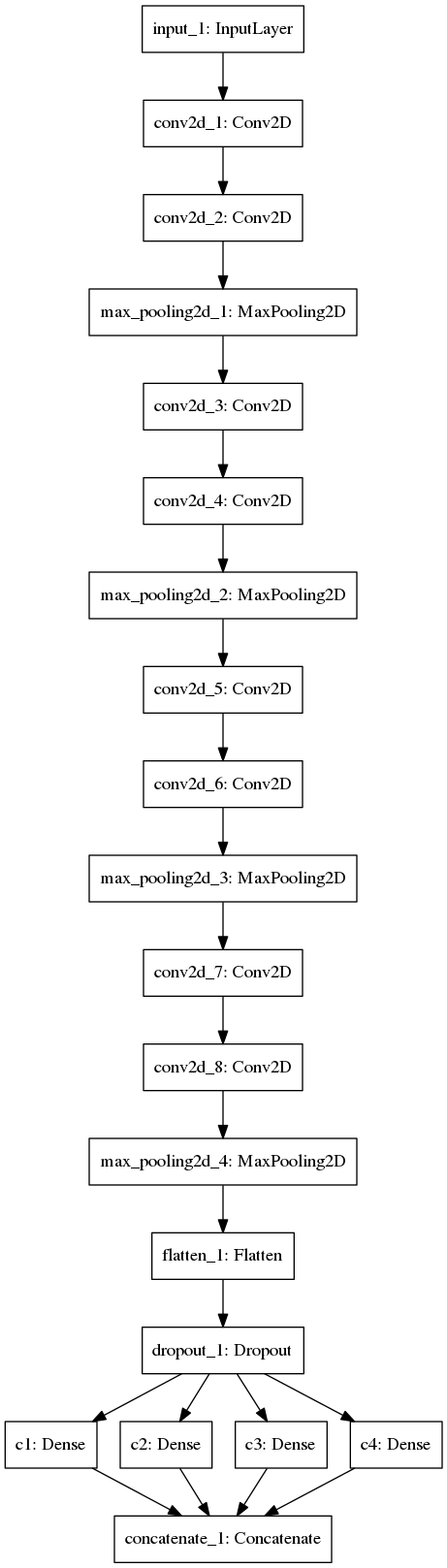

Build a network similar to VGG16.

```python
from keras.preprocessing.image import img_to_array, load_img
```
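A minimal VGG-style sketch in tf.keras, assuming 80×170 RGB input, 4 characters and 36 classes. It stacks blocks of two 3×3 convolutions followed by 2×2 max-pooling and doubles the filter count per block, as VGG does. The filter counts, the BatchNormalization layers, the dropout rate and the name `build_vgg_like` are my own assumptions, not the original post's exact network.

```python
from tensorflow import keras
from tensorflow.keras import layers

N_LEN = 4     # characters per captcha (assumed)
N_CLASS = 36  # digits + uppercase letters (assumed)


def build_vgg_like(height=80, width=170, n_len=N_LEN, n_class=N_CLASS):
    """VGG-style blocks (two 3x3 convs + max-pool), doubling filters per block."""
    inputs = keras.Input(shape=(height, width, 3))
    x = inputs
    for filters in (32, 64, 128, 256):
        for _ in range(2):
            x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
            x = layers.BatchNormalization()(x)  # not in VGG16; added to ease training
        x = layers.MaxPooling2D(2)(x)
    x = layers.Flatten()(x)
    x = layers.Dropout(0.25)(x)
    # One dense layer produces all character logits; reshape to (n_len, n_class)
    # and apply softmax independently at each character position.
    x = layers.Dense(n_len * n_class)(x)
    x = layers.Reshape((n_len, n_class))(x)
    outputs = layers.Softmax(axis=-1)(x)
    return keras.Model(inputs, outputs, name="captcha_vgg")
```

Reshaping to (4, 36) with a per-position softmax keeps the four characters as separate classification problems that share one convolutional trunk; the alternative is four separate Dense output heads.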

Let's look at the model's structure by plotting a diagram of it. Before using plot_model, install its dependencies with `pip install pydot-ng` and `apt install graphviz`.

```python
from keras.utils import plot_model
```

```python
model.summary()
```

Defining the loss and accuracy

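We can't use Keras's built-in losses and accuracy metrics here, because the captcha has four characters: a prediction is not correct when just one character is right, only when all four are.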

```python
from keras import backend as K
tf_session = K.get_session()
```
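One way to express the "all four must be right" rule as a Keras metric, assuming `y_true` and `y_pred` both have shape (batch, 4, n_class), e.g. one-hot labels and a per-position softmax output. This sketch uses TensorFlow ops, covers only the accuracy (not a custom loss), and `captcha_accuracy` is a hypothetical name.

```python
import tensorflow as tf


def captcha_accuracy(y_true, y_pred):
    """Fraction of samples whose characters are ALL predicted correctly.

    y_true, y_pred: tensors of shape (batch, n_len, n_class).
    """
    true_idx = tf.argmax(y_true, axis=-1)  # (batch, n_len)
    pred_idx = tf.argmax(y_pred, axis=-1)  # (batch, n_len)
    # A sample counts as correct only if every character position matches.
    all_correct = tf.reduce_all(tf.equal(true_idx, pred_idx), axis=-1)
    return tf.reduce_mean(tf.cast(all_correct, tf.float32))
```

Pass it to the model with `model.compile(..., metrics=[captcha_accuracy])`. For two samples where the second has one wrong character, it returns 0.5, whereas a per-character accuracy would report 7/8 and overstate how many captchas are actually solved.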

That completes training. You can generate more data and train again to reach higher accuracy.

Testing model accuracy

```python
def decode_str(result):
    ...  # function body was not preserved
```
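A hedged numpy sketch of what decoding and evaluation could look like, assuming the model outputs softmax probabilities of shape (batch, 4, 36) and uses the same character set the labels were built from. `decode_batch` and `evaluate` are hypothetical names, not the post's `decode_str`.

```python
import numpy as np

CHARSET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"  # assumed; must match training


def decode_batch(probs, charset=CHARSET):
    """Turn model output of shape (batch, n_len, n_class) into strings."""
    idx = np.argmax(probs, axis=-1)  # most likely class at each position
    return ["".join(charset[i] for i in row) for row in idx]


def evaluate(pred_texts, true_texts):
    """Return (whole-captcha accuracy, per-character accuracy)."""
    pairs = list(zip(pred_texts, true_texts))
    whole = sum(p == t for p, t in pairs) / len(pairs)
    char_hits = sum(pc == tc for p, t in pairs for pc, tc in zip(p, t))
    per_char = char_hits / sum(len(t) for t in true_texts)
    return whole, per_char
```

In practice `probs` would come from `model.predict(X)` on a freshly generated test batch. Reporting both numbers shows how far apart the two notions of accuracy are: a model can get most individual characters right while still solving relatively few whole captchas.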

Troubleshooting

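At first I threw together an arbitrary network of conv and dense layers and the results were poor, so it pays to start from an established architecture. Another problem was that the loss would not decrease. The cause was that I initially used the Adam optimizer with the learning rate (step size) set too small. After switching to Adadelta, whose initial learning rate is 1, the loss dropped quickly and the results improved noticeably.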
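A small sketch of the optimizer switch, assuming tf.keras. In standalone Keras 2.x, `Adadelta` defaults to `lr=1.0`, but in tf.keras (TF 2.x) the default `learning_rate` is 0.001, so it is safest to pass 1.0 explicitly. The tiny `Sequential` model here is only a hypothetical stand-in for the captcha network.

```python
from tensorflow import keras

# Set the learning rate explicitly: tf.keras defaults Adadelta to 0.001.
optimizer = keras.optimizers.Adadelta(learning_rate=1.0, rho=0.95)

# Hypothetical stand-in model; substitute the real captcha network.
model = keras.Sequential([
    keras.Input(shape=(8,)),
    keras.layers.Dense(4, activation="softmax"),
])
model.compile(optimizer=optimizer, loss="categorical_crossentropy", metrics=["accuracy"])
```

Adadelta scales each update by a running ratio of past update magnitudes to past gradient magnitudes, so a base learning rate of 1.0 is its intended setting rather than an aggressive one.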